Research

I was awarded a PhD in Computer Science from Oregon State University in June 2022 after spending a very long time there (including undergrad). Alan Fern was my advisor. My research generally focused on designing and building systems that perform extensive and demonstrably 'fair' evaluations of certain classes of complex machine learning algorithms. I also had many opportunities to contribute to other, varied projects. Some of the work from that time includes:

-

Stake-Free Evaluation of Graph Networks for Spatio-Temporal Processes

Link

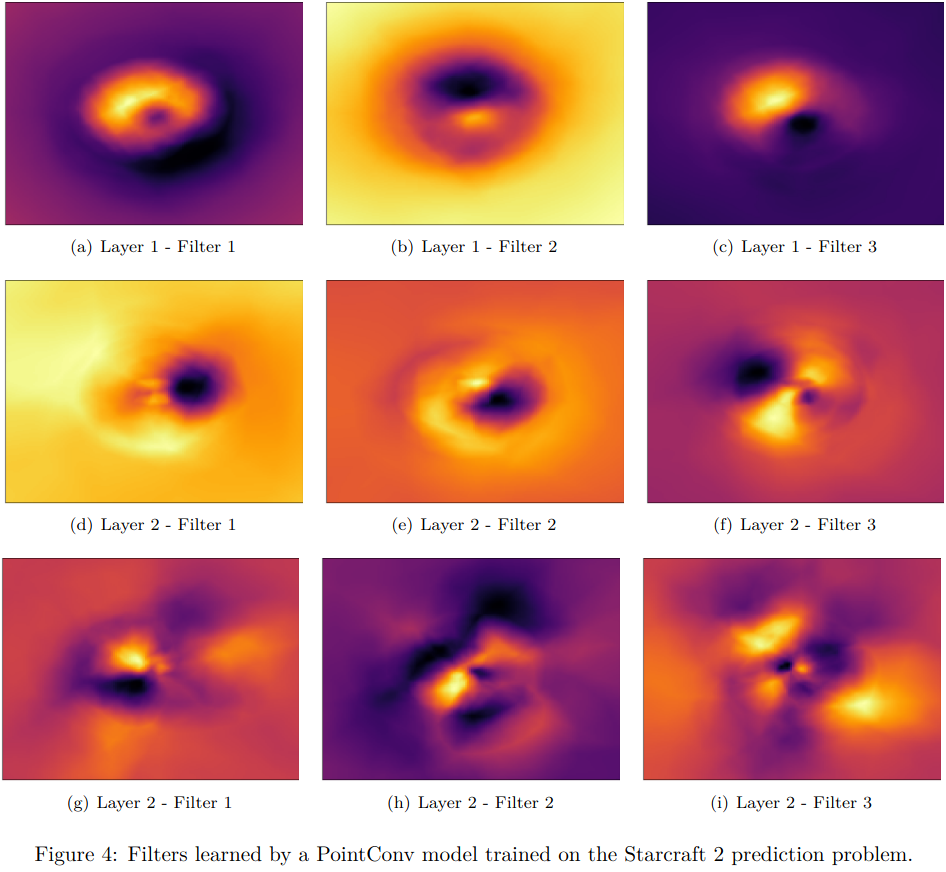

We propose a method for representing a spatio-temporal process as a graph, and investigate the behavior of different configurations of graph neural networks (GNNs) on three distinct prediction problem domains represented this way. Specifically, these domains are forecasting and 'nowcasting' weather/atmospheric conditions using data from weather stations distributed across a US state, predicting road traffic flow and congestion, and predicting the future state of the units in a simulated battle from a custom StarCraft II scenario I created for this purpose.
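To make the graph framing concrete, here is a minimal Python sketch under assumptions that are not taken from the paper: each sensor (for example, a weather station) becomes a node, edges link each node to its k nearest neighbours by location, node features are a sliding window of recent readings, and a single mean-aggregation message-passing layer with random, untrained weights stands in for a GNN. The helper names (`knn_adjacency`, `windowed_features`, `mean_message_pass`) and all data are hypothetical.

```python
import numpy as np


def knn_adjacency(coords: np.ndarray, k: int) -> np.ndarray:
    """Symmetric 0/1 adjacency linking each node to its k nearest neighbours."""
    n = coords.shape[0]
    # Pairwise Euclidean distances between node locations, shape [n, n].
    diff = coords[:, None, :] - coords[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)  # a node is never its own neighbour
    nearest = np.argsort(dist, axis=1)[:, :k]
    adj = np.zeros((n, n))
    adj[np.repeat(np.arange(n), k), nearest.ravel()] = 1.0
    return np.maximum(adj, adj.T)  # treat edges as undirected


def windowed_features(series: np.ndarray, t: int, window: int) -> np.ndarray:
    """Node features at time t: each node's previous `window` readings, shape [n, window]."""
    return series[:, t - window:t]


def mean_message_pass(adj, h, w_self, w_neigh):
    """One GNN-style layer: combine each node's own features with its neighbours' mean."""
    degree = np.maximum(adj.sum(axis=1, keepdims=True), 1.0)
    neighbour_mean = (adj @ h) / degree
    return np.maximum(0.0, h @ w_self + neighbour_mean @ w_neigh)  # ReLU


# Hypothetical data: 6 stations, one reading per station over 48 time steps.
rng = np.random.default_rng(0)
coords = rng.uniform(size=(6, 2))
series = rng.normal(size=(6, 48))

adj = knn_adjacency(coords, k=2)
h = windowed_features(series, t=48, window=12)
out = mean_message_pass(adj, h, rng.normal(size=(12, 8)), rng.normal(size=(12, 8)))
print(out.shape)  # (6, 8)
```

A k-nearest-neighbour graph is only one simple choice; distance thresholds or road-network connectivity would be equally plausible ways to define edges for the traffic domain.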

Our findings show that GNN architectures with explicit inductive biases are generally more interpretable, and we demonstrate how their behavior can be inspected to infer meaningful insights about the process they were trained on. In contrast, the less biased GNN architectures, which rely more on 'black box' machine learning components, are difficult to interpret in a useful way, but they generally perform better at accurately predicting a process's future states than their more biased counterparts.

This research involved training a large number of GNNs to cover every combination of architecture, network size, and problem domain. To address the difficulty of fairly training so many distinct GNNs, we developed and demonstrated a method to quickly and automatically determine a good training hyperparameter configuration for any deep learning network. This allowed us to ensure that our evaluation was fair, in that every GNN configuration received an equal amount of 'tuning' effort prior to training.

-

An empirical study of Bayesian optimization: acquisition versus partition

Link

We performed an extensive analysis of two related families of Bayesian optimization algorithms: partitioning-driven, in which optimization is driven by iteratively dividing the function space into smaller regions based on previous observations; and acquisition-driven, which chooses the next point to observe by optimizing an auxiliary 'acquisition function' derived from the previous observations.
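As a rough illustration of what an acquisition function is, the sketch below computes two widely used ones for minimization from a surrogate model's predicted mean and standard deviation at each candidate point: lower confidence bound (LCB), which has a tunable exploration weight `kappa`, and expected improvement (EI), which has no tunable parameter. These are the standard textbook formulas rather than the code used in the study; the surrogate itself (typically a Gaussian process) is omitted, and the candidate predictions are made-up values.

```python
import math


def norm_pdf(z: float) -> float:
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z: float) -> float:
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def lower_confidence_bound(mu: float, sigma: float, kappa: float) -> float:
    """LCB for minimization; the candidate with the smallest value is chosen next."""
    return mu - kappa * sigma


def expected_improvement(mu: float, sigma: float, best: float) -> float:
    """Expected amount by which a candidate improves on the lowest value observed so far."""
    if sigma <= 0.0:
        return max(best - mu, 0.0)
    z = (best - mu) / sigma
    return (best - mu) * norm_cdf(z) + sigma * norm_pdf(z)


# Made-up surrogate predictions (mean, std) at three candidate points.
candidates = [(0.40, 0.05), (0.50, 0.25), (0.90, 0.50)]
best_observed = 0.45
indices = range(len(candidates))

# The LCB choice shifts with kappa: mostly exploitation when small, exploration when large.
lcb_picks = [
    min(indices, key=lambda i: lower_confidence_bound(*candidates[i], kappa))
    for kappa in (0.1, 1.0, 3.0)
]
ei_pick = max(indices, key=lambda i: expected_improvement(*candidates[i], best_observed))
print(lcb_picks, ei_pick)  # [0, 1, 2] 1
```

Because each value of `kappa` selects a different candidate here, the example also hints at why a confidence-bound baseline's behavior depends heavily on how it is configured, while EI needs only the best value observed so far.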

The evaluation produced two main findings. First, acquisition-driven approaches ('traditional' Bayesian optimization) consistently outperformed partitioning-driven approaches, even when the partitioning algorithms were allowed many more iterations to reflect their lower computational cost per iteration. This is likely due to the partitioning approaches' innate structural biases and overly general assumptions about the objective's shape, which prevent them from achieving enough sample efficiency to compete consistently with the acquisition family.

Second, acquisition functions frequently used as representative baselines in other works (e.g. upper/lower confidence bound) varied significantly in performance depending on their hyperparameter configuration. If that configuration is not chosen carefully, such a function can perform especially poorly while being used to represent the expected performance of the entire acquisition family. We showed that a well-known parameter-free acquisition function, expected improvement, performs reasonably well across all of the objective functions we evaluated without requiring any configuration, suggesting that it should generally be preferred over the tunable acquisition functions as a representative baseline for such algorithms.

-

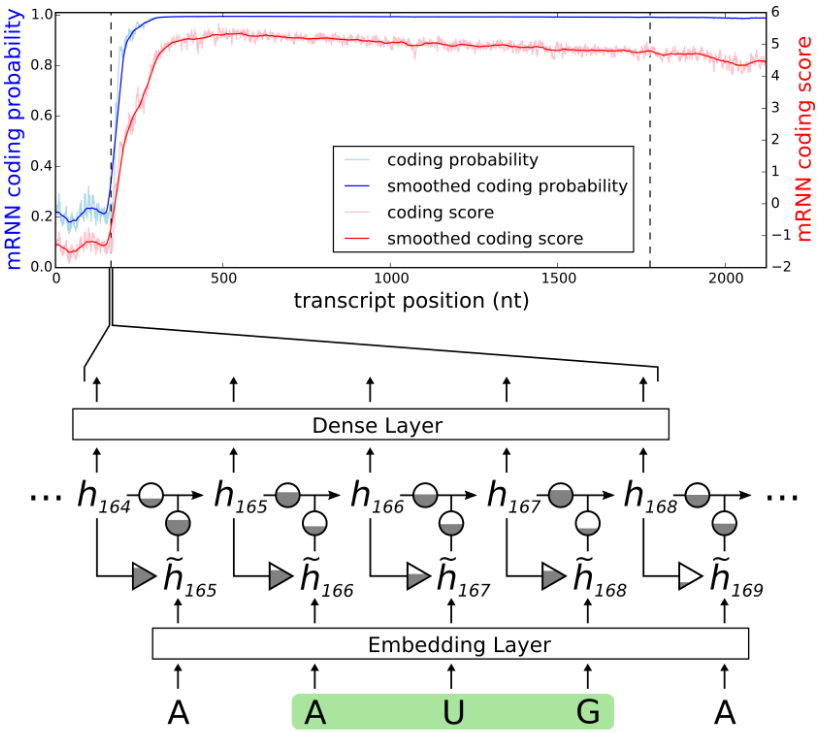

A deep recurrent neural network discovers complex biological rules to decipher RNA protein-coding potential

Link

We showed that recurrent neural networks (RNNs) can be used to predict the protein-coding potential of RNA sequences. Our model outperformed the then-current state-of-the-art tools for predicting coding potential despite being trained on relatively little sequence data. Additionally, by examining the trained model's behavior, we were able to suggest novel sequence patterns and features that may be important in determining protein-coding potential.

I worked with the primary authors, Steve and Rachael, on the technical and deep learning elements of the project. My role was to help implement the RNN model, build its training and evaluation infrastructure, and contribute to the initial analysis of the trained model's performance.

-

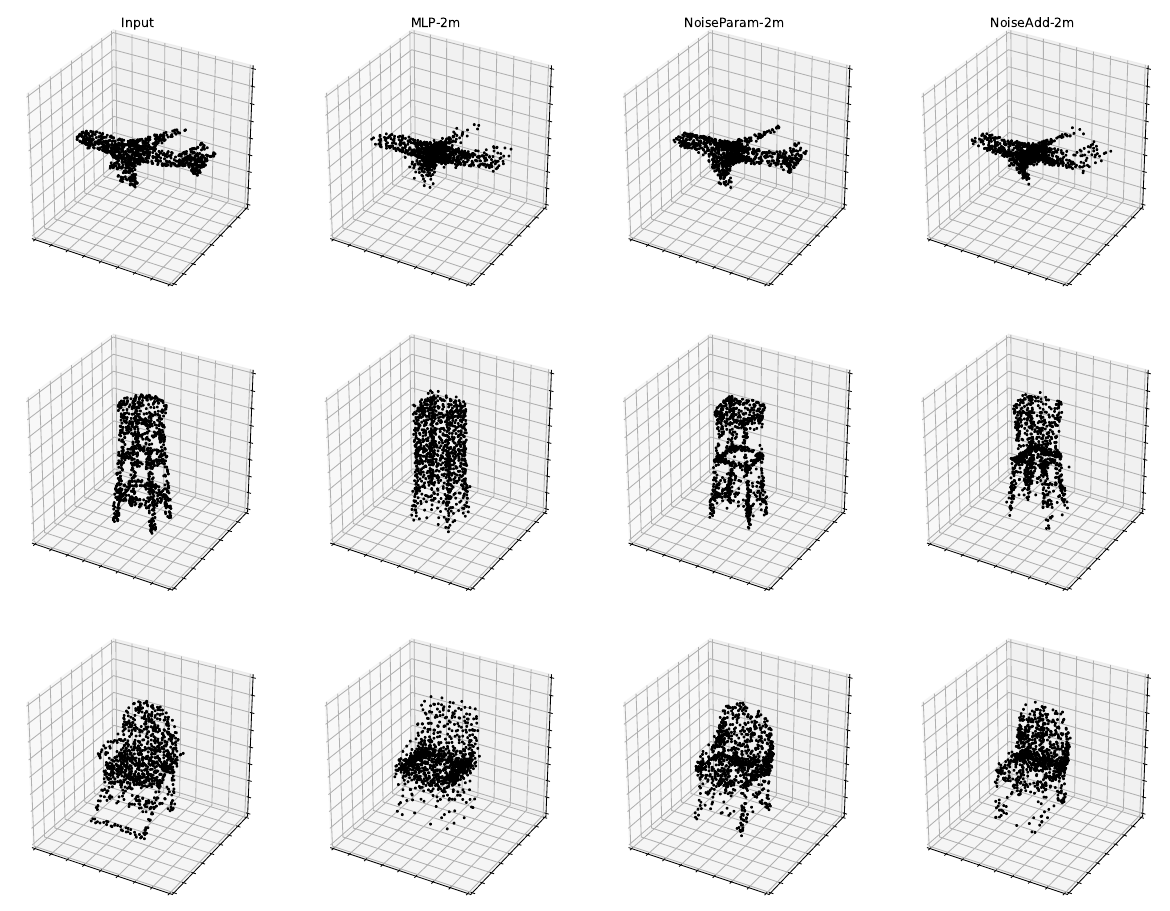

Sample-Based Point Cloud Decoder Networks

Link

We demonstrate that using a 'deep set' neural network architecture and interpreting point clouds as unordered samples from an unknown underlying distribution results in an intuitive and effective deep learning approach for encoding and decoding shapes as point clouds. Our approach can both consume and produce point clouds with arbitrary numbers of points, unlike other similar approaches, which impose constraints on the size of the input/output data. Additionally, we show that the latent encoding produced by our approach can be examined to infer meaningful properties of the shape it represents, such as its overall complexity or the orientation of its primary and secondary axes.
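The sketch below illustrates the two size- and order-related properties described above with random, untrained weights. A deep-set encoder applies the same per-point map to every point and pools with a mean (sum pooling is the other common choice), so the latent code ignores point order and accepts any number of points; a decoder that pairs the latent code with independent noise samples can then emit as many points as requested. The layer sizes, mean pooling, and the noise-conditioned decoder are assumptions for illustration, not the architecture from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Random, untrained weights; every size here is an illustrative assumption.
W_phi = rng.normal(size=(3, 32))        # per-point map phi: R^3 -> R^32
W_rho = rng.normal(size=(32, 16))       # set-level map rho: R^32 -> R^16 latent code
W_dec1 = rng.normal(size=(16 + 3, 64))  # decoder hidden layer on [latent, noise]
W_dec2 = rng.normal(size=(64, 3))       # decoder output: one 3D point per noise sample


def relu(x):
    return np.maximum(x, 0.0)


def encode(points: np.ndarray) -> np.ndarray:
    """Deep-set encoder rho(mean_i phi(x_i)); accepts any number of points, in any order."""
    per_point = relu(points @ W_phi)  # [n, 32], same map applied to every point
    pooled = per_point.mean(axis=0)   # permutation-invariant pooling, [32]
    return relu(pooled @ W_rho)       # [16]


def decode(latent: np.ndarray, num_points: int, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical sample-based decoder: one output point per (latent, noise) pair."""
    noise = rng.normal(size=(num_points, 3))
    inputs = np.concatenate([np.tile(latent, (num_points, 1)), noise], axis=1)
    return relu(inputs @ W_dec1) @ W_dec2  # [num_points, 3]


cloud = rng.normal(size=(500, 3))                 # stand-in for a scanned shape
z = encode(cloud)
z_shuffled = encode(cloud[rng.permutation(500)])  # same points, different order
decoded = decode(z, 2000, rng)                    # output size is independent of input size
print(np.allclose(z, z_shuffled), decoded.shape)  # True (2000, 3)
```

Training would fit these weights so that decoded samples match the input distribution under some set-level loss; that step is left out here because the sketch only demonstrates the shape and ordering behavior.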

This work grew out of a brief collaboration with HP on improving 3D print process performance using dense point cloud scans of printed objects. Unfortunately, the project ended before HP could experimentally validate our approach, so we changed the focus of the paper to general 3D shape encoding. Although the paper was not accepted for publication, I continued expanding this line of research, which later became the primary focus of my thesis.

-

Eve: A Virtual Data Scientist (DARPA D3M Program)

Link Link

I was the research scientist for the OSU and CRA collaboration in the DARPA D3M program. The program's goal was to build a modular metalearning system that a non-expert could use to automatically produce effective machine learning pipelines from a user-provided problem definition, data, and objectives. Our system used machine learning primitive 'building blocks' provided by other teams in the program to automatically construct, evaluate, and optimize complete machine learning pipelines for previously unseen tasks defined by a schema. It was designed to serve as a backend 'engine' for the full system's user interfaces (also provided by other teams).

Unfortunately, it became clear that a team as small as ours (just me plus one part-time CRA engineer) could not contribute effectively to the program, and we were dropped when the program was reconfigured a year in. As far as I'm aware, the report linked above is the only surviving public evidence of our participation in this project.